How Google AI Overviews Are Changing the PPC Game

Discover how Google’s AI Overviews are transforming Pay-Per-Click (PPC) advertising strategies and what marketers need to know to adapt.

Introduction

In May 2023, Google began testing AI-generated search summaries as part of its Search Generative Experience (SGE), and in May 2024 it rolled them out broadly in the US as AI Overviews. These summaries appear at the top of Search Engine Results Pages (SERPs) and aim to give users quick, comprehensive answers. While hailed as a breakthrough in user experience, AI Overviews have raised pressing questions in the digital marketing world, especially in Pay-Per-Click (PPC) advertising.

Marketers are now asking: Are AI Overviews helping or hurting ad visibility? Will users still click on ads if AI already answers their queries? And how can PPC strategies evolve to remain effective?

This article explores how Google AI Overviews are changing the PPC game and what advertisers need to know to stay ahead.

What Are Google AI Overviews?

Google AI Overviews are AI-generated summaries that appear prominently at the top of some search results. They grew out of Google's Search Generative Experience (SGE) experiment and use a customized version of Google's Gemini large language model to synthesize information from multiple web pages into a summarized answer to the user's query.

Key features:

- AI-generated content at the top of SERPs

- Linked sources from which the content is drawn

- Dynamic, conversational, and context-aware responses

- Often occupies space previously filled by ads or featured snippets

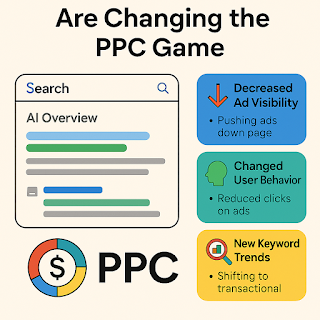

Why It Matters for PPC

PPC advertising, especially through Google Ads, relies on visibility. Ads that appear above and below the organic results capture attention, drive clicks, and lead to conversions. AI Overviews, however, now claim premium real estate on the SERPs.

Here’s how this shift is impacting the PPC ecosystem:

1. Decreased Ad Visibility

AI Overviews often push traditional ad placements further down the page. This reduced visibility can mean:

- Lower Click-Through Rates (CTR)

- Higher Cost-Per-Click (CPC) due to increased competition for fewer visible spots

- Reduced Quality Score if ad engagement drops
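These effects compound. As a rough illustration, the Python sketch below uses purely hypothetical numbers (not drawn from any real account or study) to show how a lower CTR and a higher average CPC flow through to cost per conversion. `CampaignSnapshot` and `compare` are illustrative names, not part of the Google Ads API, and Quality Score is not modeled.

```python
from dataclasses import dataclass


@dataclass
class CampaignSnapshot:
    """Metrics for one reporting period. All values here are hypothetical."""
    impressions: int
    clicks: int
    conversions: int
    avg_cpc: float  # average cost per click, in account currency

    @property
    def ctr(self) -> float:
        # Click-through rate: share of impressions that led to a click
        return self.clicks / self.impressions

    @property
    def cost(self) -> float:
        # Total spend for the period: clicks times average CPC
        return self.clicks * self.avg_cpc

    @property
    def conversion_rate(self) -> float:
        # Share of clicks that converted
        return self.conversions / self.clicks

    @property
    def cost_per_conversion(self) -> float:
        return self.cost / self.conversions


def percent_change(old: float, new: float) -> float:
    # Rounded to one decimal place to hide floating-point noise
    return round((new - old) / old * 100, 1)


def compare(before: CampaignSnapshot, after: CampaignSnapshot) -> dict:
    """Percentage change in key metrics between two periods."""
    return {
        "ctr": percent_change(before.ctr, after.ctr),
        "conversion_rate": percent_change(before.conversion_rate, after.conversion_rate),
        "avg_cpc": percent_change(before.avg_cpc, after.avg_cpc),
        "cost_per_conversion": percent_change(before.cost_per_conversion, after.cost_per_conversion),
    }


if __name__ == "__main__":
    # Hypothetical: same impressions, 25% fewer clicks, 20% higher average CPC
    before = CampaignSnapshot(impressions=10_000, clicks=400, conversions=20, avg_cpc=2.00)
    after = CampaignSnapshot(impressions=10_000, clicks=300, conversions=15, avg_cpc=2.40)
    print(compare(before, after))
    # {'ctr': -25.0, 'conversion_rate': 0.0, 'avg_cpc': 20.0, 'cost_per_conversion': 20.0}
```

In this hypothetical, 25% fewer clicks at an unchanged conversion rate, combined with a 20% higher CPC, raise cost per conversion by 20%, even though total spend falls.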

2. Changed User Behavior

Users are increasingly satisfied with AI-generated summaries and may not feel the need to click further. According to an early SGE usability report, users clicked on 40% fewer links when an AI Overview was present. This could mean:

- Fewer opportunities for conversions

- Less brand visibility unless marketers adapt

3. New Keyword Trends and Query Types

AI Overviews often appear for long-tail, informational queries rather than transactional ones. This changes the keyword landscape for PPC:

- Informational keywords may be dominated by AI answers

- Transactional keywords still retain high ad competitiveness

- Marketers may need to redefine intent-based bidding strategies

How Marketers Are Adapting

Although AI Overviews present challenges, savvy marketers are finding ways to evolve.

1. Shifting Toward Bottom-of-Funnel Keywords

With AI Overviews handling many top-of-funnel (TOFU) questions, advertisers are:

- Doubling down on bottom-of-funnel (BOFU) and high-intent keywords such as “buy,” “discount,” and “near me”

- Using exact match and phrase match targeting to reach users ready to convert

- Avoiding informational keywords that AI Overviews dominate
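One way to put this into practice is to tag search queries by funnel stage before deciding match types and bids. The Python sketch below is a deliberately simple rule-based classifier; the modifier lists are assumptions drawn from the examples in this article (“buy,” “discount,” “near me,” plus common question words), not a Google Ads feature, and a real account would tune them against its own search terms report.

```python
import re

# Hypothetical modifier lists based on the examples above; tune per account.
BOFU_MODIFIERS = ["buy", "discount", "near me", "pricing", "demo"]
TOFU_MODIFIERS = ["how", "what", "why", "guide", "tips"]


def _contains_any(query: str, modifiers: list) -> bool:
    # Case-insensitive whole-word (or whole-phrase) match, so "how" does not match "however"
    return any(
        re.search(rf"\b{re.escape(m)}\b", query, re.IGNORECASE) for m in modifiers
    )


def classify_intent(query: str) -> str:
    """Return 'bofu', 'tofu', or 'unclassified' for a search query."""
    if _contains_any(query, BOFU_MODIFIERS):
        # High intent: candidate for exact/phrase match and bid increases
        return "bofu"
    if _contains_any(query, TOFU_MODIFIERS):
        # Informational: more likely to be answered by an AI Overview
        return "tofu"
    return "unclassified"


if __name__ == "__main__":
    for q in ["buy running shoes", "how to choose running shoes",
              "running shoes near me", "running shoes"]:
        print(f"{q!r}: {classify_intent(q)}")
```

BOFU modifiers are checked first, so a mixed query such as “how to buy running shoes” leans transactional. Queries that match neither list land in “unclassified,” which is better treated as a cue for manual review than as a default bidding decision.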

2. Optimizing for AI Inclusion

Interestingly, some brands are working to get featured in the AI Overviews themselves:

- Creating high-quality, authoritative content

- Answering common questions in a concise, trustworthy format

- Implementing schema markup, FAQs, and clear headings

This may not result in a direct PPC benefit, but it increases organic visibility and can support brand recognition alongside paid campaigns.

3. Using First-Party Data to Refine PPC

Since CTR data may be distorted by AI Overviews, marketers are increasingly turning to:

- First-party data from CRMs, apps, and customer databases

- Audience segmentation and remarketing based on behavior rather than search alone

This improves targeting efficiency even when surface-level data such as SERP clicks becomes less reliable.

Google's Mixed Messaging to Advertisers

Google has assured advertisers that AI Overviews won’t hurt PPC effectiveness, but the industry remains skeptical. Advertising still generates roughly three-quarters of Alphabet's revenue, so the company is unlikely to undermine it. However, some changes have already been noted:

- Fewer ad slots appearing on some overview-heavy pages

- Greater reliance on Performance Max and automation, making manual bidding less viable

- More emphasis on ad relevance and landing page quality due to tighter competition

In a sense, Google's message is: If you want to survive in the AI era, lean into automation and AI-powered ad tools.

New Opportunities Emerging from the Shift

While many fear reduced visibility, AI Overviews may also create new opportunities for PPC advertisers:

1. Smarter Search Ads with AI-Generated Assets

Google is integrating AI-generated assets into ads themselves. With responsive search ads (RSAs) and AI-written headlines, advertisers can:

- Reach broader audiences with personalized content

- Automatically adjust messaging based on AI's interpretation of user intent

This can help ads perform even on pages dominated by AI Overviews.

2. Visual Search and Shopping Integration

AI Overviews also include visual responses and product carousels. Google is encouraging advertisers to:

- Use Product Listing Ads (PLAs)

- Integrate with Merchant Center and Google Shopping

- Submit high-quality images and product feeds

These enhancements make ads more compelling in visually driven AI Overviews.

3. Voice and Conversational Commerce

As AI Overviews become more conversational, PPC may evolve into voice-driven advertising:

- Voice assistants using Google Search may pull from both AI answers and ads

- Smart brands are preparing voice-optimized copy

- Conversational CTAs (“Buy now,” “Schedule a call”) are being tested in AI-powered ad formats

Case Studies: Brands Reacting to AI Overviews

Case Study 1: eCommerce Fashion Brand

A mid-sized fashion retailer noticed a 25% drop in CTR for generic product keywords after AI Overviews rolled out. They responded by:

- Focusing PPC spend on branded and competitor keywords

- Enhancing Shopping ads with high-res product imagery and reviews

- Leveraging influencer-led content to appear in AI Overviews

Result: a 12% increase in conversion rate and better ROI.

Case Study 2: B2B SaaS Platform

A B2B software platform saw fewer PPC leads from “best CRM software” queries, which now triggered AI Overviews. The company shifted strategy:

- Ran LinkedIn ads targeting decision-makers

- Created pillar blog content that appeared in AI Overviews

- Focused PPC on “demo,” “pricing,” and “comparison” keywords

Outcome: Cost per lead dropped by 20%, despite lower search CTRs.

Future Outlook: Where Is PPC Heading?

The introduction of AI Overviews signals a shift toward intent-first search powered by artificial intelligence. PPC isn’t dead—it’s evolving.

Predictions:

- AI-powered PPC tools will dominate: Google Ads will become more autonomous, with Performance Max and AI bidding as standard.

- Visual and conversational ads will rise: Expect ads embedded within AI Overviews, voice search results, and image-driven content.

- SEO and PPC will integrate more closely: As AI controls visibility, brands will need a unified strategy that blends organic and paid efforts.

Conclusion

Google AI Overviews are undeniably changing the rules of the PPC game. For advertisers, this means less reliance on traditional keyword strategies and more focus on intent, audience behavior, and AI-powered tools. While ad visibility might shrink in some areas, new doors are opening—particularly for those who adapt quickly.

The key takeaway? Don't fight the AI shift—work with it. Marketers who align their strategies with AI behavior will find themselves not only surviving, but thriving in this new era of intelligent search.
